Why explainable AI might be useful for you

“Why would I want explainable AI?”

When I bring up the topic of explainable AI, I usually don’t get this kind of response. Most people have some idea that there’s a potential danger with respect to AI and that inspection of what goes on in a model can be useful to deal with that. Often though, they leave it at that and prefer the better performance of a black box.

However, explaining what your model does or how it arrives at a particular decision is not just about danger. There might be a hidden “performance” boost in inspecting the decision-making!

🐛

In more traditional software development, it is very common to include logging and debug information in your programs. You want to see what your webserver is doing to check whether it’s functioning properly. You want to see how one mailserver is talking to another (for example, to discover that your mail is slow to arrive because you’ve enabled a particular feature in the configuration).

📉

Of course, while you’re training your model, you can look at the training and validation loss. That shows you how the optimisation of your model is going. But it’s a surface-level inspection, and it only helps during the training/validation phase.

🧐

Having methods to explain what’s happening in a model is very useful during all phases of development. It can help you detect mistakes in your data processing or feature extraction. And while running in production, you can sample decisions and inspect whether or not the model is doing something weird, which can help you spot problems such as data drift.
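To make that a bit more concrete, here’s a minimal sketch of one common technique, permutation importance, in plain Python. Everything in it is made up for illustration: the synthetic data, the feature names (`signal`, `noise`, `leak`) and the tiny nearest-centroid model are assumptions, not something from a real project. The idea is to shuffle one feature at a time and see how much the accuracy drops. If a single feature carries nearly all of the model’s accuracy, that’s often a hint to go and check your data pipeline.

```python
import random

def make_data(n, rng):
    """Synthetic rows [signal, noise, leak] plus a binary label (all hypothetical)."""
    rows, labels = [], []
    for _ in range(n):
        y = rng.randint(0, 1)
        signal = y + rng.gauss(0, 0.5)     # genuinely informative, but noisy
        noise = rng.gauss(0, 1)            # unrelated to the label
        leak = 10 * y + rng.gauss(0, 0.1)  # simulated pipeline bug: encodes the label
        rows.append([signal, noise, leak])
        labels.append(y)
    return rows, labels

def fit_centroids(rows, labels):
    """Nearest-centroid 'model': the mean feature vector per class."""
    centroids = {}
    for cls in set(labels):
        members = [r for r, y in zip(rows, labels) if y == cls]
        centroids[cls] = [sum(col) / len(members) for col in zip(*members)]
    return centroids

def predict(centroids, row):
    """Return the class whose centroid is closest to the row."""
    def sq_dist(c):
        return sum((a - b) ** 2 for a, b in zip(row, c))
    return min(centroids, key=lambda cls: sq_dist(centroids[cls]))

def accuracy(centroids, rows, labels):
    hits = sum(predict(centroids, r) == y for r, y in zip(rows, labels))
    return hits / len(rows)

def permutation_importance(centroids, rows, labels, names, rng):
    """Accuracy drop per feature when that feature's column is shuffled."""
    baseline = accuracy(centroids, rows, labels)
    result = {}
    for j, name in enumerate(names):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between this feature and the label
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        result[name] = baseline - accuracy(centroids, shuffled, labels)
    return result

rng = random.Random(0)  # fixed seed so the run is repeatable
names = ["signal", "noise", "leak"]
train_rows, train_labels = make_data(400, rng)
test_rows, test_labels = make_data(400, rng)
model = fit_centroids(train_rows, train_labels)
importances = permutation_importance(model, test_rows, test_labels, names, rng)

for name, drop in sorted(importances.items(), key=lambda kv: -kv[1]):
    print(f"{name:>6}: accuracy drop {drop:.2f}")
```

In this toy setup the model leans almost entirely on `leak`, which the loss curve alone would never tell you. In a real project you’d probably reach for a library implementation rather than rolling your own, but the principle is the same.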

💃

So, from a performance standpoint, it already appears useful to be able to introspect your models. But there’s another benefit, especially if you include humans in the loop: it builds trust in the decisions (something that ChatGPT still needs to earn… 😉) and it gives the human context for their own decision-making.

In summary, explainable AI can not only provide insight into what’s going on in a model, but it can also lead to performance gains, bug detection and improved human trust. How are you employing explainable AI? And are you actually looking at the explanations of your model?

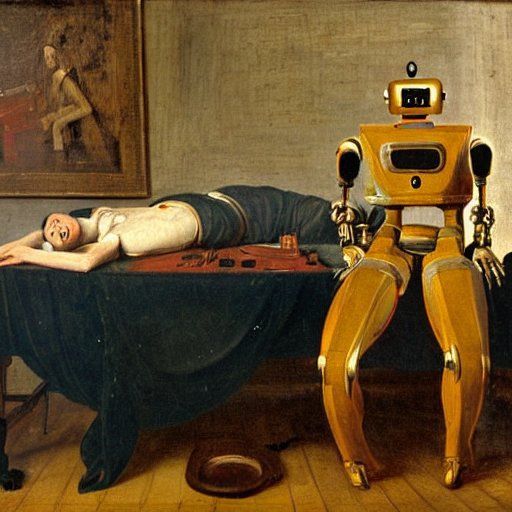

A robot inspecting a human, image created with Stable Diffusion

(Also posted on my LinkedIn feed)