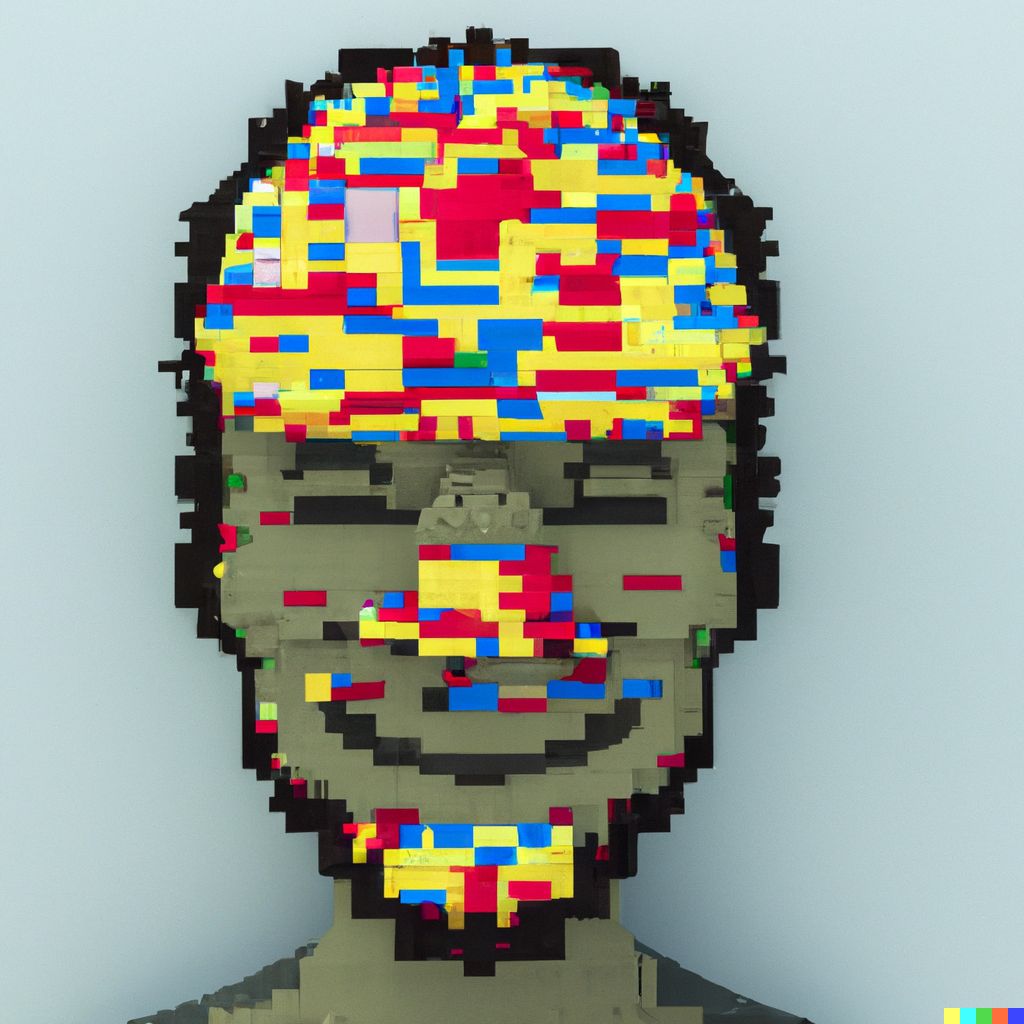

Embeddings are like Lego bricks for AI

ChatGPT is making all the headlines, and every news outlet is covering this phenomenon. Meanwhile, another advancement might be even more exciting. No, it’s not GPT-4 or the latest image generation tool: OpenAI released a new GPT-3 embedding model that you can access through its API. This is exciting for developers of AI applications such as search engines and text classifiers! Let me explain.

An embedding model transforms a piece of text into a list of numbers (a vector). Texts with similar meanings end up with similar vectors, which makes embeddings useful for measuring how similar two pieces of text are. The vectors can also serve as input for other machine learning models: for example, you can train a model that determines the sentiment of a tweet. The new model, “text-embedding-ada-002” (“ada-002” for short), is as good as the previous best model, “davinci-001”, but is much faster and a lot cheaper. OpenAI also increased the maximum length of each piece of text you can put into the model, which is useful if you’re dealing with longer documents.
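To make “similar vectors” concrete, here is a minimal sketch of cosine similarity, a common way to compare two embeddings. The vectors below are made-up 4-number stand-ins; real ada-002 embeddings contain 1,536 numbers. To get real vectors you would call OpenAI’s embeddings endpoint (for example, `client.embeddings.create(model="text-embedding-ada-002", input=[...])` in the official `openai` Python package, v1 or later), which this offline sketch does not do.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy "embeddings"; values are invented for illustration only.
cat = [0.9, 0.1, 0.0, 0.2]
kitten = [0.85, 0.15, 0.05, 0.25]
invoice = [0.0, 0.8, 0.6, 0.1]

print(round(cosine_similarity(cat, kitten), 3))   # close to 1: similar meaning
print(round(cosine_similarity(cat, invoice), 3))  # much lower: unrelated meaning
```

A score near 1 means the two texts point in nearly the same direction in embedding space; unrelated texts score lower.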

Even though ChatGPT is a huge advance for the field, improvements to embedding models are more practically relevant, especially for developers and machine learning engineers. That is because embeddings are building blocks. A chat interface is cool, and you can build some interesting projects on top of it, but improving the building blocks that everyone can use means that engineers can build all kinds of applications. Out of the box, you can use these embeddings for text similarity and search, but you can also use them for clustering, anomaly detection, and much more.
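As an illustration of the “search” use case, here is a minimal sketch of embedding-based search: embed every document once, embed the query, and rank documents by cosine similarity. The document titles and 3-number vectors are hypothetical placeholders for real embeddings, and the brute-force loop is only meant for small collections.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def search(query_vec: list[float], corpus: dict[str, list[float]], top_k: int = 2):
    """Return the top_k (title, score) pairs most similar to query_vec."""
    scored = [(title, cosine_similarity(query_vec, vec)) for title, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # highest similarity first
    return scored[:top_k]


# Hypothetical pre-computed document embeddings (toy vectors, invented values).
corpus = {
    "How to bake bread": [0.9, 0.1, 0.0],
    "Sourdough starter tips": [0.8, 0.2, 0.1],
    "Quarterly tax filing": [0.0, 0.1, 0.95],
}
# Stands in for the embedding of a query such as "bread recipes".
query = [0.85, 0.15, 0.05]

for title, score in search(query, corpus):
    print(f"{score:.3f}  {title}")
```

The same vectors could feed other techniques the post mentions, such as clustering or flagging texts that sit far from all others as anomalies.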

(I generated the image using DALL·E 2, with the prompt “A photo of a human brain made out of lego bricks digital art high quality”. Notice how they are not really LEGO bricks: they are surprisingly hard to generate!)

(Also posted on my LinkedIn feed)