A parable of an elephant and Random Forests

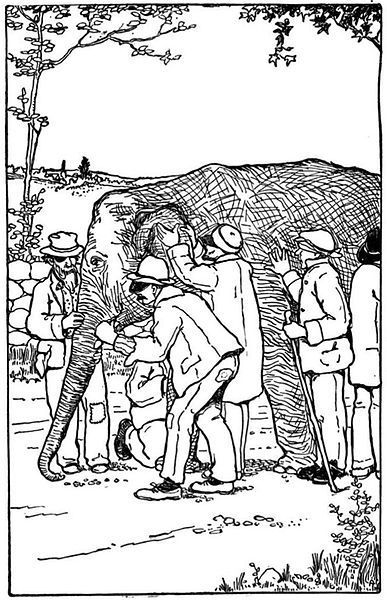

What do forests, a group of blind men, and an elephant have in common? This is a quick story about one of my favourite machine learning tools!

🐘

Do you know the parable of the blind men and an elephant? It’s a story in which several blind men come across an elephant, and each feels a different part of the animal: one touches its side, another a leg, another its trunk. They try to get a complete picture by describing their part to each other, but each finds it hard to believe what the others are describing. Ultimately, they fail to form a coherent image.

🌳

Random Forest, a popular type of machine learning classifier, is like the group of blind men, except that it actually does manage to get a useful representation. A Random Forest is made up of many decision trees, each trained on a different random subset of your training data and features. A single decision tree is not generally regarded as a strong classifier, so it seems counter-intuitive to give each one even less data and fewer features to work with. Shouldn’t you use a stronger classifier when you have less data available?
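To make the "different random sets" idea concrete, here is a minimal sketch of how one tree's view of the data could be drawn. The helper name `random_view` and the toy data are my own illustration, not part of any library. I'm also assuming the feature subset is picked once per tree to keep things simple; many implementations re-draw the candidate features at every split instead.

```python
import random

def random_view(rows, n_features, rng):
    """Draw one tree's view: a bootstrap sample of rows and a random feature subset."""
    # Sample row indices with replacement (a bootstrap sample),
    # so some rows repeat and others are left out.
    row_idx = [rng.randrange(len(rows)) for _ in rows]
    # Pick a random subset of feature columns, without replacement.
    # Assumption: chosen once per tree, for simplicity.
    feat_idx = sorted(rng.sample(range(len(rows[0])), n_features))
    sample = [[rows[i][j] for j in feat_idx] for i in row_idx]
    return sample, row_idx, feat_idx

rng = random.Random(0)  # fixed seed so the sketch is repeatable
rows = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]  # toy data: 3 rows, 4 features
sample, row_idx, feat_idx = random_view(rows, n_features=2, rng=rng)
```

Each call gives a different, partial picture of the same data, much like each man touching a different part of the elephant.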

🗳️

The trick is that you combine several hundred decision trees, and each individual tree sees a different part of the problem (like the blind men!). The final output of the Random Forest comes from combining the classifications of all these trees to form the complete picture. That’s like asking the group of men to come up with one final answer. Usually you just take the most common vote (“I felt an elephant”, “I felt a tree trunk”, …), but other combining schemes are possible.
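The "most common vote" step is simple enough to sketch in a few lines. This is only an illustration of majority voting, with a made-up `majority_vote` helper and made-up votes; it is not how any particular library implements it.

```python
from collections import Counter

def majority_vote(votes):
    """Return the label that the most voters chose."""
    # most_common(1) gives a list with one (label, count) pair.
    # On a tie, Counter keeps the label that was seen first.
    return Counter(votes).most_common(1)[0][0]

# Hypothetical votes from five trees (or five blind men).
votes = ["elephant", "tree trunk", "elephant", "snake", "elephant"]
winner = majority_vote(votes)
print(winner)  # prints "elephant"
```

No single voter needs to be right on its own; the answer only needs to win the vote.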

🧰

This surprising effectiveness of randomness is what makes Random Forests a treasured tool in my machine learning toolbox. What’s your favourite (non-neural net) machine learning tool? Share your thoughts in the comments on my LinkedIn page!

(Also posted on my LinkedIn feed)